Confirmation bias is the tendency to seek out or interpret information in ways that are consistent with one’s existing beliefs [1]. This biased approach to decision-making is largely unintentional and often results in ignoring inconsistent information. Existing beliefs can include one’s expectations in a given situation and predictions about a particular outcome. People are especially likely to process information to support their own beliefs when the issue is highly important or self-relevant.

In a previous article, I outlined the differences between two distinct cultures: compliance and commitment. This post describes how confirmation bias can perpetuate a culture of compliance. I will also discuss how the conversations that take place in a workplace with a culture of commitment minimize the potential for confirmation bias.

Culture of Compliance

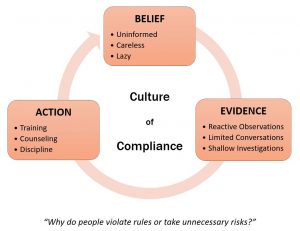

The model below explains how confirmation bias influences decision-making (and the actions taken by managers) when an organization is managed through compliance.

It begins with a person’s existing beliefs.

Supervisors and managers know that one reason a person could violate a rule, policy, or procedure is if they are uninformed (because of poor training or lack of experience).

However, if a subsequent conversation with the employee reveals that he has done this specific task many times before and/or has been trained, a supervisor who is part of a culture of compliance often shifts to another explanation. He concludes that the person ignored a rule, took an unnecessary risk, or made a mistake because of poor work habits. For example, the employee might be labeled as careless, inattentive, or lazy.

By reaching this conclusion, this supervisor is likely making a fundamental attribution error. Indeed, many of us are prone to making this error – because we often attribute people’s behavior to ‘the way they are’, rather than to ‘the situation they are in.’

Confirmation bias emerges when the supervisor seeks to validate his existing beliefs by looking for supporting evidence. When seeking this information, the supervisor will tend to look for evidence that confirms that his conclusion is true – rather than pursuing information that could prove his view is inaccurate. In a culture of compliance, the supervisor obtains this supporting evidence through mostly cursory methods. These include:

- making observations only after an event has occurred

- initiating brief, one-sided conversations with employees that are mostly directive in nature

- conducting “check-the-box” incident investigations that fail to uncover root causes

The action taken by the supervisor is contingent upon the evidence that he or she obtains. If his or her assessment reveals that a genuine lack of experience or knowledge has resulted in a rule or policy violation, the employee will be given the appropriate job training.

But what happens if the individual has received sufficient training? Or he has performed the task successfully many times? And yet this person still does not follow a rule, policy, or procedure (or takes an unnecessary risk)? In a culture of compliance, the supervisor’s belief system leads him to make a decision that is frequently ill-advised. He reasons that since the employee knows how to do the task, he must be careless, lazy, or inattentive. Therefore, the only way to change this unacceptable behavior is through counseling or discipline.

By taking this action, the supervisor sincerely believes he is fulfilling his obligation of “holding people accountable”. After all (he believes), conscientious employees who are sufficiently trained would never violate a rule, policy, or procedure. Likewise, any reasonable individual certainly would not take an unnecessary risk. Armed with these beliefs, the supervisor expects that if employees are trained, only those with a poor work ethic (careless, lazy, inattentive, etc.) will violate a rule or take a risk.

(In fact, people may violate a rule or take a risk for reasons that have nothing to do with personal attributes. Social influences, procedural drift, and multi-tasking are just a few factors to consider.)

Indeed, the supervisor’s belief system in this scenario is continuously reinforced by confirmation bias. Seen from the supervisor’s perspective, the cycle looks like this:

- I believe that some people could violate a rule because they are uninformed. However, most rule-breakers and risk-takers are either lazy or careless.

- My “evidence” is based upon the logic that if a person is trained and given clear expectations, there is no reason for them to violate a rule or take an unnecessary risk.

- I address most non-compliance through warnings or counseling.

- Assuming these persons have received training, the next (lazy) rule-breaker or (careless) risk-taker that I encounter will need discipline to correct this behavior.

One way to break this cycle is to look for information (evidence) in a way that objectively considers alternative reasons for risk-taking or errors. We will examine this approach next.

Culture of Commitment

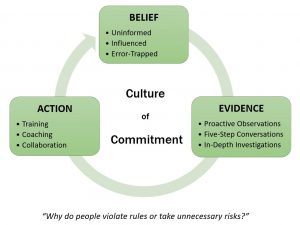

Let’s look at the decision-making model that is prevalent in a culture of commitment.

Supervisors who are part of a culture of commitment also hold certain beliefs. However, many of these beliefs are notably different when compared to those in a culture of compliance. Why? They have been developed through a set of experiences that are unique to a culture of commitment. These beliefs are rooted in a deeper understanding of human fallibility, as well as our susceptibility to being influenced to take risks.

Regardless of the culture in which they work, most supervisors actually share a common belief. They (correctly) believe that a primary reason anyone could potentially violate a rule, policy, or procedure is that the employee is uninformed.

But this is where the two belief systems diverge.

In a culture of commitment, if it is confirmed that the employee is knowledgeable and has been trained to perform the task, the supervisor’s experience yields a different set of beliefs regarding these undesired behaviors. He realizes that, most of the time, if an employee ignores a rule, takes an unnecessary risk, or makes an error, it is because the worker is:

- influenced to take a risk [3]

- set up to make a mistake (because of a situation referred to as an error trap [2])

The supervisor will seek to validate his beliefs by looking for evidence. However, unlike someone who manages through compliance, the supervisor who fosters a culture of commitment is more diligent and objective in his search for evidence. As a result, confirmation bias is much less likely to occur.

Methods that are used to obtain the supporting evidence include:

- making proactive observations to understand the situations and mindset of employees

- facilitating structured, personal conversations with employees that encourage shared learning

- conducting detailed incident investigations that are designed to uncover true root causes

Collecting evidence in this way is crucial. These discoveries frequently support his belief system about the causes for errors and/or risk-taking.

As before, the action taken by the supervisor is contingent upon the evidence that he or she obtains. If he uncovers a lack of experience or understanding by the employee, further training on how to perform the specific tasks of the job is warranted.

However, what if the individual is adequately trained? In a culture of commitment, the supervisor knows that over 90% of human error and many at-risk behaviors are a result of process or organizational flaws [4].

The supervisor may decide to do any number of things. All the actions listed below are preceded by a sincere, caring conversation with the employee, where an assessment is made to determine the root cause:

- The employee could have been influenced to take a risk or violate a rule because of a false perception or a risk-taking habit. In this case, the supervisor will engage in coaching or counseling.

- Perhaps the employee was influenced to take a risk or violate a rule because of a physical obstacle or barrier. If so, the supervisor will seek to collaborate with the employee on removing this source of influence.

- When someone makes a mistake, the supervisor will look for potential error traps. He will mitigate these situations or implement mistake-proofing solutions with input from the employee.

These actions reinforce the commonly held belief system in a culture of commitment that employees are often influenced to take risks and are set up to make mistakes.

To summarize this decision-making model:

A virtuous cycle is created where employees are given the “benefit of the doubt” on why they violated a rule or took a risk. This is the foundation of the supervisor’s belief system. The evidence nearly always reveals the root cause as influences and/or error traps. The actions that are taken reflect a genuine caring for the employee, as well as a desire for learning and improvement.

To compare and contrast how these decision-making models work, let’s look at a case study. The following is based on an actual event.

Case Study – The Missing Gloves

One of the work rules at a large food processor is to always wear latex gloves whenever handling any ingredients. This is essential not only to protect the employees’ hands, but as a hygiene and food safety requirement.

Although every new hire was informed about this rule and gloves were available, some employees were occasionally observed not wearing gloves. To encourage compliance, supervisors issued verbal or written warnings. But the problem persisted.

At the same time, the plant was faced with periodic episodes of plastic contamination in their food products. The employees were instructed to be more attentive when processing the raw materials. They were told to be sure that plastic was not allowed to enter the production system. Moreover, it was made clear to the employees that disciplinary action would be taken if anyone was found to be careless in allowing any plastic to enter the production process in their work area.

It turns out these two events were related.

The connection was made when an analysis of the plastic contaminants in the food showed that some were made of latex. The color of these foreign materials also matched the color of the gloves.

Employee interviews revealed an astonishing contradiction. The workers knew they had to wear the gloves or face a reprimand; that much was clear. However, they also pointed out that if plastic of any kind was found in your production line, you could also be disciplined. The punishment for a “plastic contaminant violation” was more severe than that for “glove non-compliance” because the financial losses associated with contamination were very large.

In addition, there was only one size of glove (XL) available for everyone. And the smaller employees (mostly women) could not wear these gloves, as they would often fall off! It was a true conundrum.

What did these employees do? They assessed which policy or rule to violate that would minimize the severity of the potential discipline, and they often chose not to wear the gloves when they thought that no one was looking. (They would wear the oversized gloves when a supervisor was present, then remove them after he left the area.)

In this culture of compliance, the area supervisor believed that these employees did not wear gloves because they were careless, lazy, inattentive, etc. Confirmation bias shaped how he gathered evidence: through brief, directive conversations. Because his cursory investigation didn’t uncover a reason for the employees not to wear the gloves (they knew the rule and the gloves were available), the supervisor took action by either counseling them or issuing discipline.

In the decision-making cycle for a culture of commitment, there would have been a much different outcome. In this situation, the supervisor would have realized that people violate rules for a number of reasons. A deeper conversation (initiated because he cared about his employees) would almost certainly have revealed the obstacle of ill-fitting gloves as a legitimate reason the employees violated this policy. The supervisor might also have discovered a number of error traps that could contribute to the mistake of gloves ending up in the process (e.g., rushing, frustration, the environment). His actions would likely have centered on collaboration with the employees to address these flaws.

In this case, the solution is obvious: provide gloves in enough sizes to fit everyone comfortably so they can do their jobs. However, these kinds of positive outcomes are only possible if supervisors engage their employees in dialogues that are free from confirmation bias.

Conclusion

What kinds of conversations are you having with your employees? Unless your conversations are rooted in caring (and conducted in a manner that is consistent with a culture of commitment), you are susceptible to confirmation bias. This unintentionally biased decision-making can keep you in a state of managing for compliance, where the principal objective is to maintain or exert control.

To foster learning and improvement, you need to ask questions (and find answers) that reveal the real reasons why people violate rules or take risks. Do you have the courage to look for evidence or gather information that could challenge your existing belief system?

It starts with being sincere and diligent in the conversations you have with others.

References

1. https://www.britannica.com/

2. Error Elimination Tools. Practicing Perfection Institute, Inc. 2016. www.ppiweb.com

3. Understanding Influences on Risk – A Four-Part Model. Terry Mathis and Shawn Galloway. February 2010.

4. Out of the Crisis. W. Edwards Deming. Massachusetts Institute of Technology. Cambridge, MA. 1986.